[Note: This is a draft I originally wrote in September 2023, after my deep dive into neurosymbolic AI that summer. Given the recent advances in reasoning made by Harmonic, DeepMind, and OpenAI, I thought I’d share this write-up.]

For decades after the field of AI was established, instilling reasoning and problem-solving skills into computers, an approach referred to as symbolic AI, was considered the ultimate pursuit. Researchers spent years translating human reasoning processes and rule-based systems into handwritten lines of code, while ensuring their validity through complicated verification methods.

However, despite this collective effort, the advent of powerful compute, big data, and progress in unsupervised learning overshadowed this hand-crafted approach and led to the prominence of neural networks today.

Neural networks have shown impressive capabilities in leveraging massive amounts of data to recognize patterns and make predictions. However, as seen recently with large language models (LLMs), they have issues with hallucinations and truthfulness. Their reasoning is implicit, which makes them black boxes: it is very difficult for users to understand and trace how an output was generated.

To make up for these shortcomings, researchers have explored methods such as prompt engineering, retrieval-augmented generation (RAG), fine-tuning, and even pre-training from scratch, all with varying degrees of success.

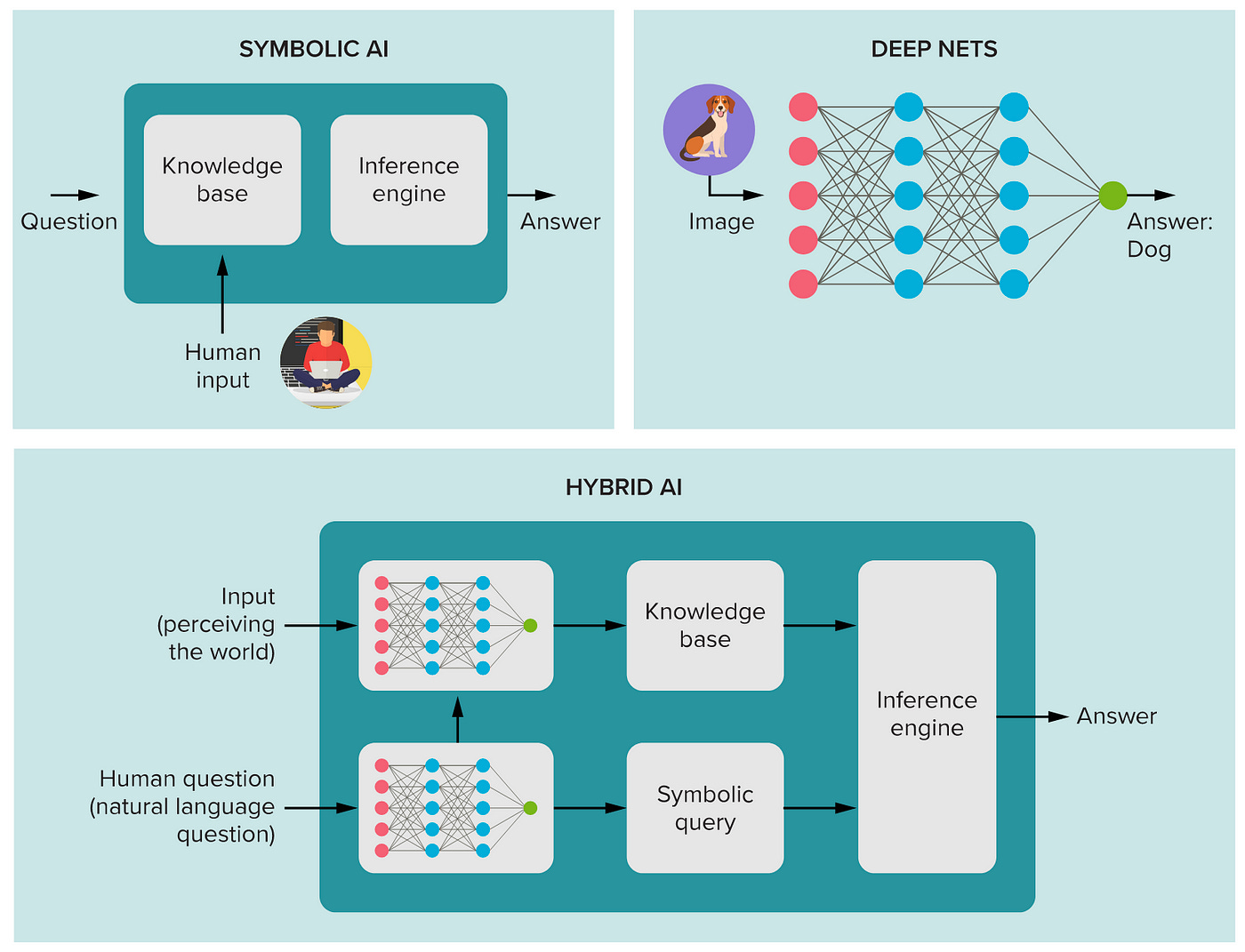

One promising direction we have been looking into tackles these shortcomings at the architecture level through an approach called neurosymbolic AI. Neurosymbolic AI revisits the ideas of symbolic AI and combines them with neural networks, capitalizing on the complementary strengths of the two approaches while compensating for their respective weaknesses.

Neurosymbolic AI Overview

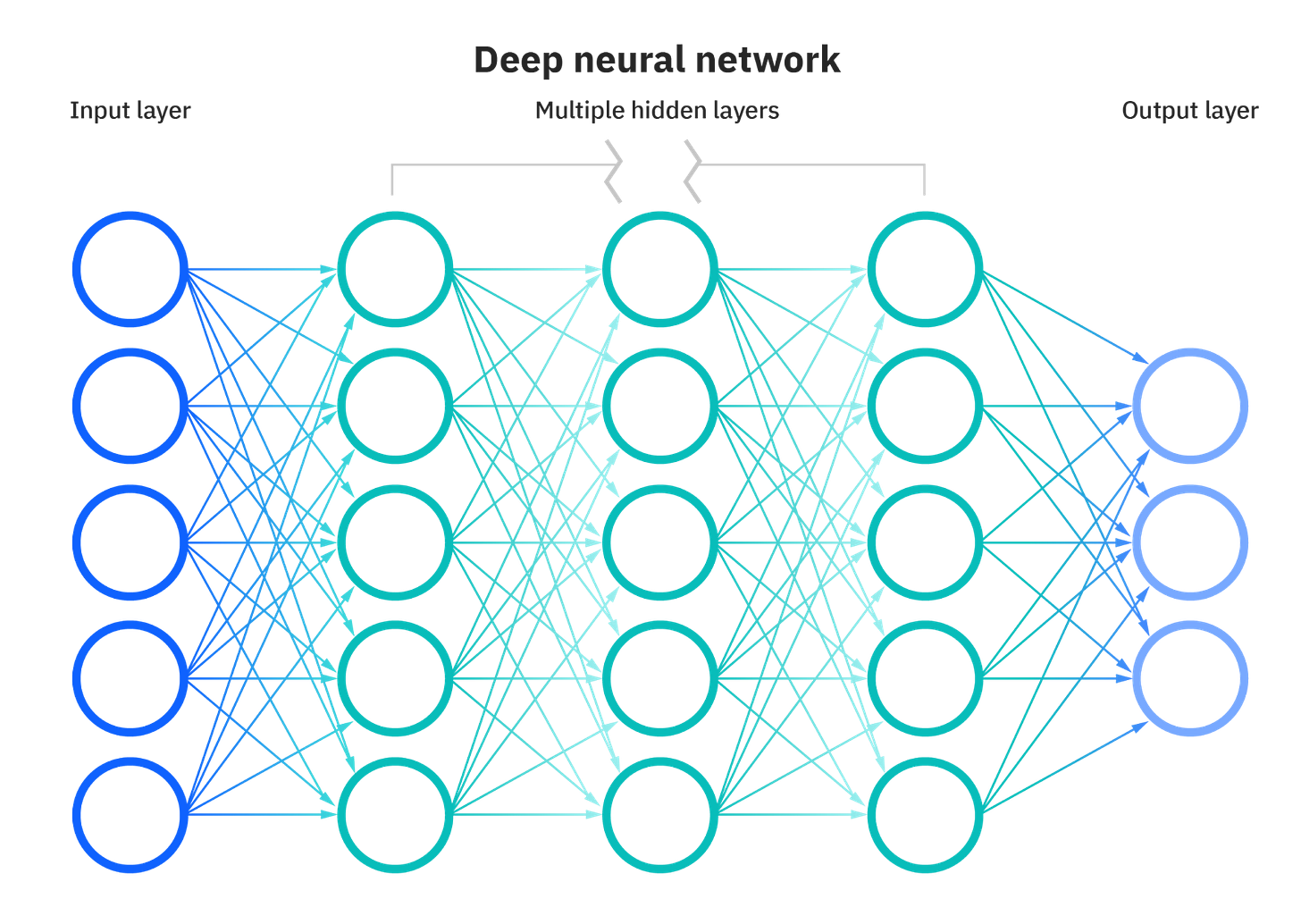

Neural networks can be trained on large amounts of raw data and are powerful at prediction and recognition. Information is encoded in the strength of the connections between nodes, in the form of weights, which allows inputs to be mapped to distributed, vector-based representations, as seen with LLMs.

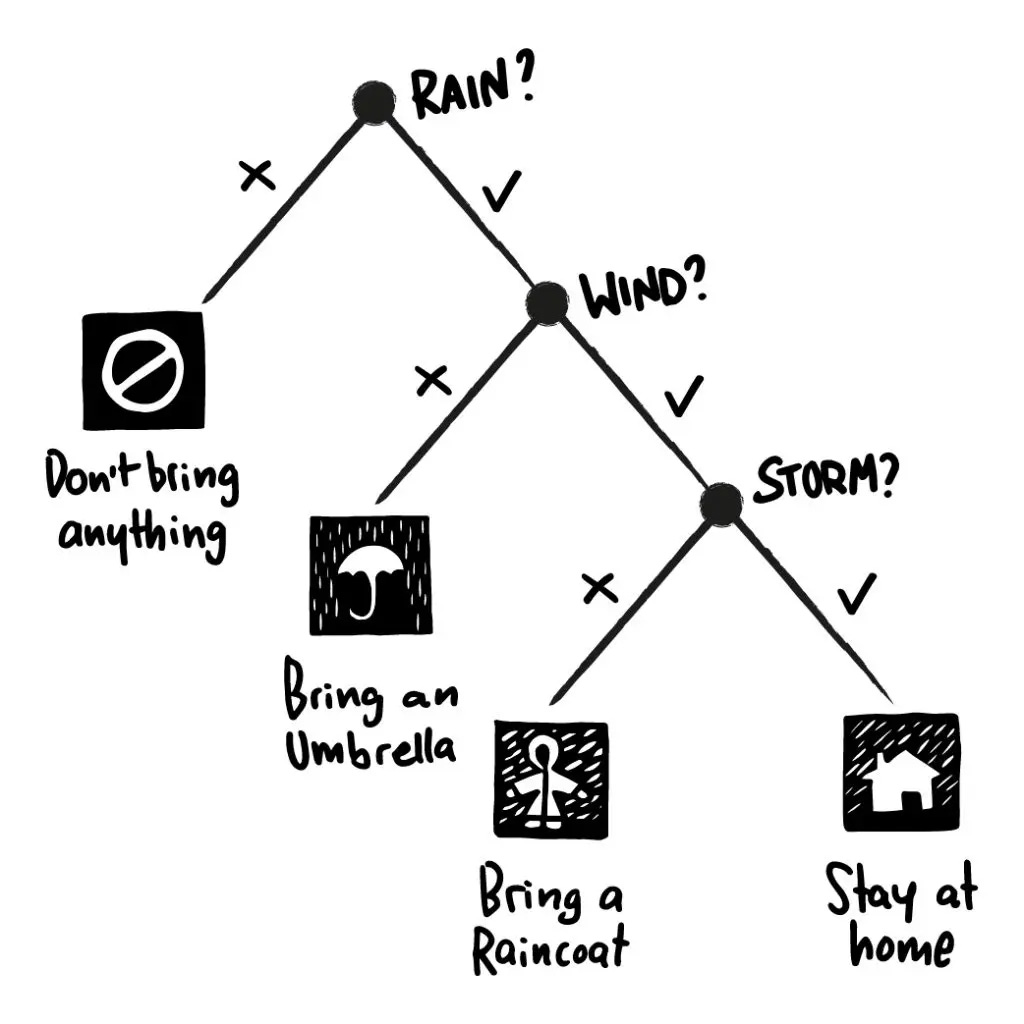

In contrast, symbolic AI uses symbols to represent real-world concepts and their relationships, with logical rules and constraints applied on top. Knowledge is represented in the form of if-then rules, facts, and logic. As a result, symbolic systems are highly explainable, allow expert knowledge to be explicitly programmed with much less data than neural networks need, and can perform logical reasoning. A calculator is a form of symbolic system: the concept of a number is represented as a symbol, and mathematical rules are applied to arrive at a result. Other examples include a knowledge graph or a decision tree.
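To make the idea of if-then rules and traceable reasoning concrete, here is a minimal sketch of a rule-based system using forward chaining. The facts, the rules, and the `forward_chain` helper are all invented for illustration and are not taken from any particular system.

```python
from typing import FrozenSet, List, Set, Tuple

# A rule is (premises, conclusion): if every premise is a known fact,
# the conclusion becomes a known fact too.
Rule = Tuple[FrozenSet[str], str]

# Hypothetical toy knowledge base, made up for this illustration.
RULES: List[Rule] = [
    (frozenset({"has_feathers"}), "is_bird"),
    (frozenset({"is_bird", "cannot_fly", "swims"}), "is_penguin"),
]


def forward_chain(facts: Set[str], rules: List[Rule]) -> Tuple[Set[str], List[str]]:
    """Apply rules until no new fact can be derived; return facts and a trace."""
    known = set(facts)
    trace: List[str] = []
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                # Record which rule fired so the derivation can be audited.
                trace.append(f"{' & '.join(sorted(premises))} -> {conclusion}")
                changed = True
    return known, trace


facts, trace = forward_chain({"has_feathers", "cannot_fly", "swims"}, RULES)
for step in trace:
    print(step)
```

Every derived fact comes with the rule that produced it, which is the explainability property described above. The knowledge here was written by hand rather than learned from data.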

In neurosymbolic AI, the logic and knowledge derived from symbolic AI are used to drive how the neural network behaves and processes information, or vice versa. During inference, a neural network could call on a symbolic component to reason and make a decision when it determines that the symbolic component's knowledge is better suited to the task at hand. This reduces hallucinations and provides a traceable record of how the answer was generated. Alternatively, a symbolic representation, such as a decision tree, could be created by the neural network itself as it thinks through a problem, which could substantially improve its decision-making process.

Startups’ Implementation of Neurosymbolic AI

During the recent Edge Intelligence AI Summit in San Francisco, we spoke with Łukasz Kaiser, one of the authors of the seminal Attention Is All You Need paper and currently a researcher at OpenAI.

Łukasz stated that a systematic approach that combines long-form reasoning with symbolic AI is one of the most promising directions for LLMs: “If you just rely on a single model for the right answer, there is tremendous pressure on it to be extremely performant. But if you can get the model to think through a problem for much longer and pull in symbolic tools to help supplement it, it could be very powerful.” Talking about the state of AI today, he noted that although GPT-4 has access to tools, it is still brittle and unable to fully leverage them. Training the model to use these tools from the outset could unlock significant breakthroughs in performance.

Not surprisingly, we have noticed a pattern of startups exploring neurosymbolic AI as a way to tackle the issues of LLM safety and reliability.

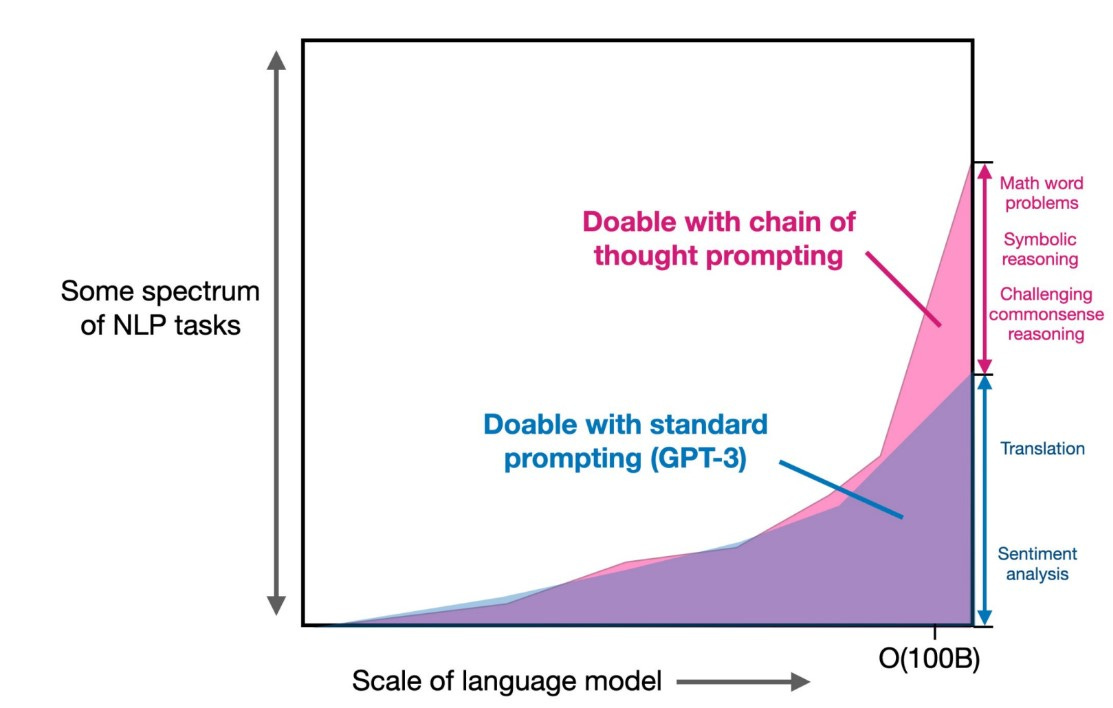

At the most basic level, off-the-shelf LLMs can be made to display certain symbolic characteristics, such as explicit reasoning, which can reduce error rates. Techniques like Chain-of-Thought prompting have shown that 1) prompting LLMs to think through a question via a series of intermediate reasoning steps and 2) providing a few worked examples can improve their performance on tasks like math word problems. However, these systems are still entirely neural network-based, with no guarantee that the reasoning path is correct, so they remain susceptible to errors and hallucinations.

(Chain of Thought performance graph - Jason Wei presentation)

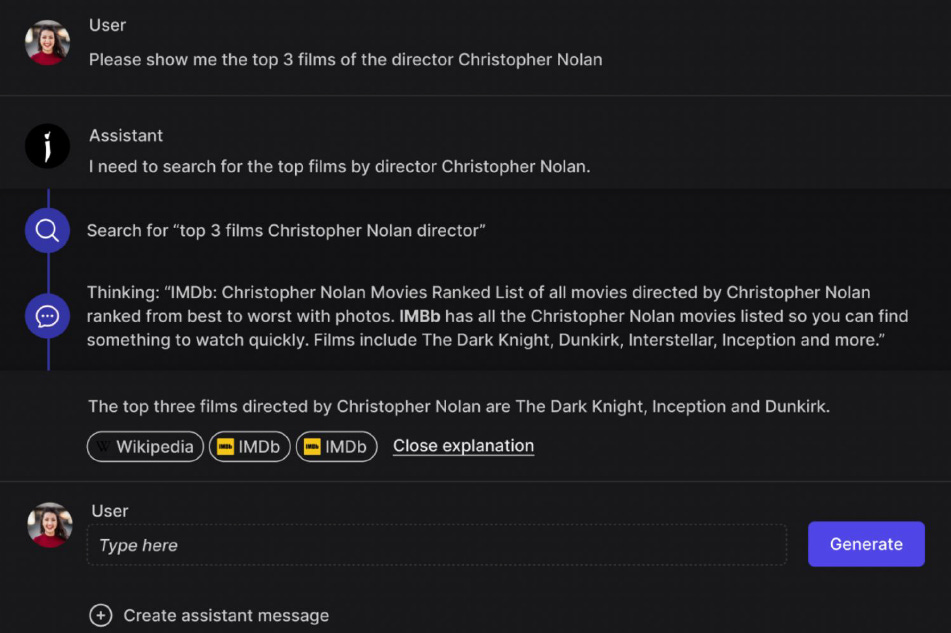
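As a rough illustration of the Chain-of-Thought prompt format described above, the sketch below assembles a few-shot prompt in which each example shows its intermediate reasoning. The exemplar text and the `build_cot_prompt` helper are made up for this write-up, and no model is called; the code only builds the string that would be sent to one.

```python
from typing import List, Tuple

# Each exemplar is (question, worked reasoning, final answer).
# This exemplar is invented for illustration.
Exemplar = Tuple[str, str, str]

EXEMPLARS: List[Exemplar] = [
    (
        "Tom has 3 apples and buys 2 more. How many apples does he have?",
        "Tom starts with 3 apples. He buys 2 more, so 3 + 2 = 5.",
        "5",
    ),
]


def build_cot_prompt(question: str, exemplars: List[Exemplar]) -> str:
    """Build a few-shot prompt whose examples show intermediate reasoning steps."""
    parts = []
    for q, reasoning, answer in exemplars:
        parts.append(f"Q: {q}\nA: {reasoning} The answer is {answer}.")
    # End with an open "A:" so the model continues with its own reasoning.
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


prompt = build_cot_prompt(
    "A shelf holds 4 boxes with 6 books each. How many books are there?",
    EXEMPLARS,
)
print(prompt)
```

Nothing in this format checks that the reasoning the model produces is valid, which is the limitation noted above.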

Conjecture, a company founded by ex-EleutherAI researchers, goes a few steps beyond Chain-of-Thought prompting. They incorporate LLMs into a broader system that includes symbolic AI tools. In their “Cognitive Emulation” system, when a user prompts the system, the LLM is used to understand the intent and plan the tasks needed to fulfill the request. If a task requires a tool like a decision tree, a search engine, or a calculator, the LLM sends the request to that tool. Using the information it receives back, the LLM then generates a response to the user. This ensures that the “thought process” of the system is explicitly stated and that responses are grounded in facts that can be traced back to their source.
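The plan, route, and respond loop described above can be sketched roughly as follows. This is not Conjecture's implementation: the `plan` function is a keyword-based stand-in for the LLM planner, and the tool registry, function names, and trace format are all assumptions made for illustration.

```python
import ast
import operator
from typing import Callable, Dict, List, Tuple

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression: str) -> str:
    """Symbolic tool: evaluate +, -, *, / arithmetic by walking the syntax tree."""
    def ev(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")
    return str(ev(ast.parse(expression, mode="eval").body))


# Hypothetical tool registry; a fuller system might add search or a decision tree.
TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}


def plan(request: str) -> Tuple[str, str]:
    """Stand-in for the LLM planner: pick a tool and its input (keyword rule)."""
    prefix = "compute "
    if request.lower().startswith(prefix):
        return "calculator", request[len(prefix):]
    return "none", request


def answer(request: str) -> Tuple[str, List[str]]:
    """Route the request, call the tool if one is needed, and keep a trace."""
    tool, tool_input = plan(request)
    trace = [f"plan: tool={tool}, input={tool_input!r}"]
    if tool in TOOLS:
        result = TOOLS[tool](tool_input)
        trace.append(f"{tool} returned {result}")
        return f"The result is {result}.", trace
    trace.append("no tool used")
    return "No tool applies to this request.", trace


reply, steps = answer("compute 12 * (3 + 4)")
print(reply)
print(steps)
```

The arithmetic is done by a deterministic tool rather than by the model, and the trace records which tool was called and what it returned, so the answer can be followed back to its source.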

DeepMind’s AlphaGo Zero uses a more complex neurosymbolic system that improves itself via self-play reinforcement learning. During training, the system learns by playing against itself over and over again. For each position, the neural network proposes promising moves and estimates the probability of winning; a symbolic tool, Monte Carlo Tree Search (MCTS), then uses those estimates to look ahead and find even better moves. As a result, the system selects much stronger moves than the neural network would on its own.
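One way to see how the network and the tree search interact is the move-selection rule used inside the search, which adds the average value found so far for a move (Q) to an exploration bonus weighted by the network's prior (U). The sketch below covers only that single step, not the full search or training loop. The `Edge` and `select_move` names and the `c_puct = 1.0` default are assumptions chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import Dict


@dataclass
class Edge:
    prior: float              # P(s, a): the network's probability for this move
    visits: int = 0           # N(s, a): how many times search has tried it
    total_value: float = 0.0  # W(s, a): sum of values backed up through it

    @property
    def mean_value(self) -> float:
        """Q(s, a): average backed-up value, 0 for an unvisited move."""
        return self.total_value / self.visits if self.visits else 0.0


def select_move(edges: Dict[str, Edge], c_puct: float = 1.0) -> str:
    """Pick the move maximizing Q + U; U favors high-prior, rarely visited moves."""
    total_visits = sum(e.visits for e in edges.values())

    def score(e: Edge) -> float:
        u = c_puct * e.prior * math.sqrt(total_visits) / (1 + e.visits)
        return e.mean_value + u

    return max(edges, key=lambda move: score(edges[move]))


# Hypothetical statistics for two candidate moves at one position.
edges = {
    "a": Edge(prior=0.6, visits=10, total_value=2.0),
    "b": Edge(prior=0.4, visits=0),
}
print(select_move(edges))
```

In this example move "b" is chosen even though the network prefers "a": the search has not tried "b" yet, so its exploration bonus outweighs the modest average value of "a". As visit counts grow, the bonus shrinks and the choice is driven more by the values the search has actually observed.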

Similarly, when we spoke to Unlikely AI Founder and CEO William Tunstall-Pedoe, whose previous company, Evi, was acquired by Amazon and helped create Alexa, he explained that Unlikely AI takes inspiration from successful implementations of symbolic AI like AlphaGo to improve LLMs. While he did not reveal details of the technology because the company is still in stealth, he explained that symbolic AI holds the key to alignment, explainability, and safety.

Looking Ahead

Neurosymbolic AI holds promise for improving alignment and reducing hallucination in LLMs, but beyond this, it could also have implications for edge deployment of LLMs. Relying less on the model itself means LLMs could be smaller. Combine that with access to tools, and smaller models gain more capabilities. Furthermore, neurosymbolic systems can require less training data than purely neural approaches. This means these types of models may be able to learn and generalize effectively from sparse individual usage data, which could potentially enable new applications.

We believe we are still in the early stages of exploring hybrid architectures. More research and real-world deployment will be needed to address their shortcomings, but we believe promising new use cases could come out of this work.