Lemons in the Age of AI

The Market for Lemons

George Akerlof introduced the concept of a market for lemons in 1970, arguing that low-quality products combined with information asymmetry degrade a market as a whole. His theory was based on observations of the used car market.

A seller may know about the underlying issues with a used car but still decide to sell it for more than it is worth. The information asymmetry works to the seller's advantage. When this happens often enough and buyers continue to get burned, they become cautious and less willing to pay even a reasonable price for a car. This downward pressure makes it harder for owners of high-quality, well-maintained cars to sell at a fair price, eventually driving them out of the market. In the end, only lemons are left behind. The system has collapsed under the weight of too many sellers prioritizing personal gain over being reputable and trustworthy, all enabled by information asymmetry.
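The unraveling described above can be sketched as a toy simulation. This is a minimal illustration under my own assumptions, not Akerlof's exact model: buyers cannot inspect individual cars, so they offer a single price based on the average quality of what is on the market, and any seller whose car is worth more than that price withdraws. The function name, the `buyer_premium` parameter, and the quality values are all hypothetical.

```python
def simulate_lemons_market(qualities, buyer_premium=1.5, max_rounds=50):
    """Toy adverse-selection loop.

    qualities:     what each car is worth to its seller (hypothetical values)
    buyer_premium: assumption that buyers value a car at 1.5x its quality
    Returns a list of (price_offered, cars_on_market), one entry per round.
    """
    on_market = list(qualities)
    history = []
    for _ in range(max_rounds):
        if not on_market:
            break
        # Buyers cannot tell cars apart, so they price the average car.
        average_quality = sum(on_market) / len(on_market)
        price = buyer_premium * average_quality
        history.append((price, len(on_market)))
        # Sellers whose car is worth more than the offer leave the market.
        remaining = [q for q in on_market if q <= price]
        if len(remaining) == len(on_market):
            break  # nobody else leaves; the market has settled
        on_market = remaining
    return history


# 100 cars with quality spread evenly from 0.01 to 1.00.
cars = [i / 100 for i in range(1, 101)]
for price, count in simulate_lemons_market(cars):
    print(f"price offered: {price:.3f}   cars on market: {count}")
```

Each round, the best remaining cars exit, which lowers the average, which lowers the next offer. Even though buyers in this sketch would happily pay a premium for every car, the market shrinks to a handful of the worst ones.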

For AI research, I suspect we are in the early stages of a similar phenomenon: information asymmetry has led to lemons being revered as peaches. They are then built into products, leading to a poor end-user experience and an erosion of trust.

Research Volume

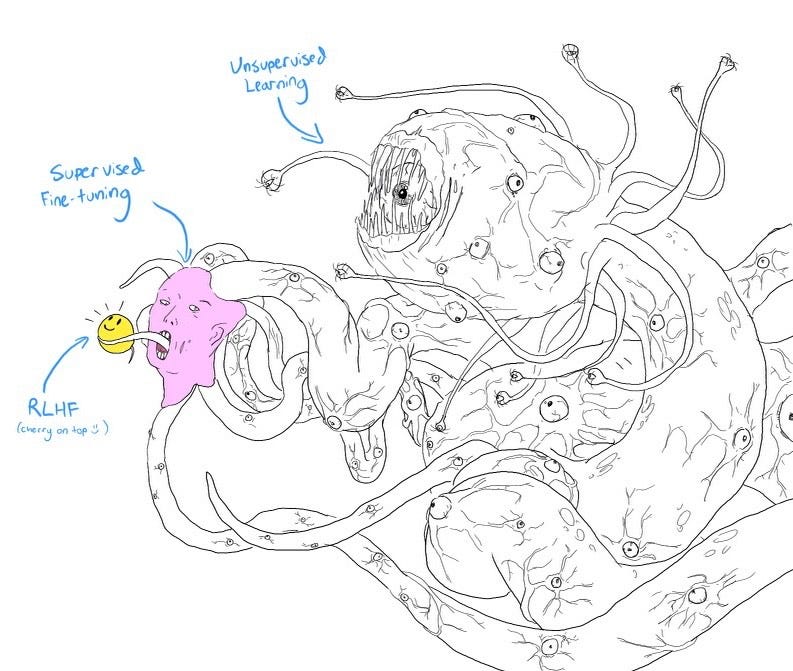

Model architectures vary so widely that there is a plethora of research topics to explore. Even a text-to-image model like Stable Diffusion can be broken down into a text encoder and a diffusion model, allowing researchers to swap either one out for a different component. Mix in variation in parameter count, fine-tuning, quantization methods, and even prompting, and you have an endless combination of chimeras that can be created.

The issue is that as research volume grows, formal peer review becomes more difficult.

We saw the consequences of this in the early days of the pandemic:

Most COVID-19 evidence syntheses described as systematic or rapid review were of low quality and missed out cornerstones of best practice. Less than a half reported critically appraising their included studies, and a third had no reproducible search strategy. Review conduct and publication were rapid. Interest, as measured by altmetrics and citations, was high, and not correlated with quality.

With AI research today, informal Twitter discussions have become the signal. If a paper or article on arXiv gets an initial burst of attention, whether because of a bombastic conclusion or the pre-existing biases of its audience, it can spread rapidly through retweets and likes. The result is an echo chamber that serves as a false signal of the work's actual significance.

The situation becomes more complicated when research is only partially disclosed, yet a product built on it gains significant popularity. Given the black-box nature of LLMs, even the authors may not fully understand the model’s capabilities and biases.

Public and Private Shortcomings

When these lemons do make it into a product, we see issues like what happened with Microsoft’s Bing and Baidu’s Ernie.

GPT-powered Bing giving the wrong answer about a company’s earnings, or Ernie failing to recreate a video shown in a demo, is little more than a minor annoyance for the companies and their users. So far, the issues we have seen with these models have been small.

However, I expect these issues to get worse as the technology is quietly adopted in high-stakes settings.

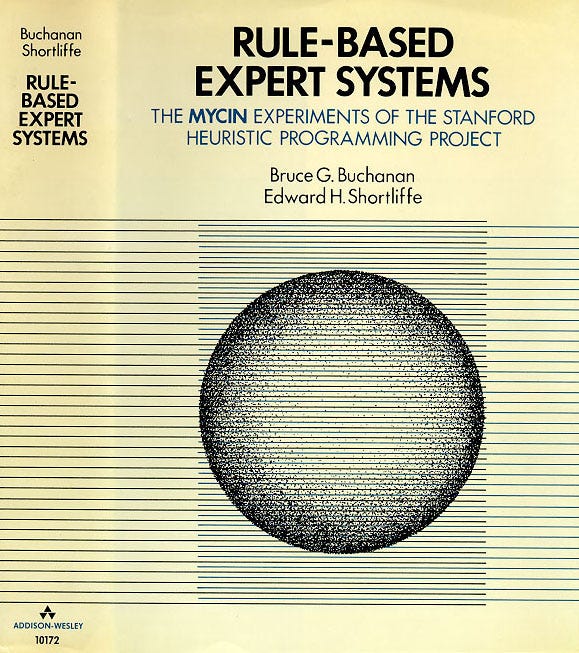

Ethical concerns are often cited as the reason Stanford's Mycin, an AI tool for diagnosing and treating bacterial infections, was not widely adopted by doctors when it came out in the 1970s, despite its human-level performance. But I would argue that the bigger barrier was the lack of access to computing power. With the advent of smartphones and cloud computing, it seems inevitable that people will start experimenting with similar tools.

As a patient, I would want my doctor to be explicit with me if he used an AI-powered tool to diagnose my condition. A simple PDF that outlines the collaboration between the doctor and the AI, the thought process, and the statistics on the chance of a correct diagnosis would be vastly better than a doctor informally inputting symptoms into ChatGPT during his downtime at work and having the result affect his diagnosis.

With an explicit outline of the model’s limitations, rather than a disclaimer from a chatbot claiming that it is not suitable for medicine, this tiptoeing around informal use would not be necessary, and inaccurate extrapolations could be prevented.

Reverberations

Releasing AI tools with limited transparency poses risks that are difficult to anticipate or manage effectively, in spite of potential short-term benefits. Unforeseen issues could emerge as systems are applied in broader, more complex, and more diverse scenarios than they were tested in. When problems do arise from an opaque system, it is hard to determine who is responsible, and regulation becomes harder to implement.

The consequences of continuing on our current path will be much more significant, rearing their ugly head in the form of Gresham's law: “bad [research] drives out good”.

Imagine a scenario where a doctor somehow finds a way for Alpaca to answer a question about his patient’s condition. The misdiagnosis, incorrect prescription, and resulting harm could put massive, short-sighted regulatory pressure on AI companies.

Congressional hearings will be held, with hilarious questions thrown at Sundar and Zuckerberg. What will not be so laughable is the pullback in open research, as more companies clam up and withhold information from the public for fear of backlash.

This in turn will lead to more lemons affecting the direction of research, albeit behind closed doors.

Just think of how Kaplan’s scaling laws, released by OpenAI, turned out to underweight the role of training data. For nearly two years, researchers were obsessed with increasing the parameter count of their models. It was only when DeepMind’s Chinchilla paper showed that scaling training data in proportion to parameter count (roughly 20 tokens per parameter) improved performance that Kaplan’s laws fell by the wayside. Incredibly, it took that long even though the research was open.

Imagine what would’ve happened if details were withheld.
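To make the 20:1 ratio concrete, here is a small back-of-the-envelope sketch. The 20 tokens per parameter figure is the rule of thumb cited above. The cost estimate of roughly 6 FLOPs per parameter per token is a common approximation that I am assuming here, and the function names are my own.

```python
import math

TOKENS_PER_PARAM = 20       # rule of thumb associated with the Chinchilla paper
FLOPS_PER_PARAM_TOKEN = 6   # assumption: training cost C is about 6 * N * D


def optimal_tokens(n_params):
    """Training tokens suggested by the 20:1 rule for a model of n_params."""
    return TOKENS_PER_PARAM * n_params


def training_flops(n_params, n_tokens):
    """Approximate training compute under the 6 * N * D assumption."""
    return FLOPS_PER_PARAM_TOKEN * n_params * n_tokens


def compute_optimal_split(flops_budget):
    """Split a compute budget into (params, tokens) that satisfy the 20:1 rule.

    Solves C = 6 * N * (20 * N) for N.
    """
    n_params = math.sqrt(flops_budget / (FLOPS_PER_PARAM_TOKEN * TOKENS_PER_PARAM))
    return n_params, TOKENS_PER_PARAM * n_params


# A 70-billion-parameter model, the size of Chinchilla itself.
params = 70_000_000_000
tokens = optimal_tokens(params)
print(f"{params:,} parameters -> {tokens:,} tokens")
print(f"approximate training compute: {training_flops(params, tokens):.2e} FLOPs")
```

Under the rule, parameters and tokens grow together: multiplying the compute budget by 100 multiplies both by 10, rather than pouring nearly all of the extra compute into a larger model.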

Path Forward

There is no simple solution to this issue. Both open and closed research merit consideration. Progress breeds consequence, however gradual. Change, though promising, faces resistance from established ways.

A fully transparent system, both for research and product, could be one solution to the problem. However, we seem to have made so much progress in our old ways that a radical change may turn out to be too disruptive.

Is this the cost of progress? Maybe. Still, I do think some form of change is in order, especially when considering the chain of events that might unfold.

While I can speculate, I feel hesitant to criticize or prescribe solutions — I am not the man in the arena. But this issue of lemons was something that I had been pondering over for the last few months. The lack of an obvious solution made me hesitant to release this essay, and despite my hope that putting it down in writing would help me in finding one, it has not quite happened.

Many thanks to Sophia Shi for her invaluable feedback.